
Over the past decade, cloud computing has powered nearly everything, from mobile apps and SaaS platforms to AI tools and enterprise systems. But a quieter shift is now reshaping how modern applications perform.

That shift is edge computing.

While cloud infrastructure remains critical, edge computing is redefining how fast, reliable, and intelligent applications can become. Businesses focused on performance, real-time processing, and AI-driven workflows are increasingly moving workloads closer to users.

At Finally Free Productions, we’re seeing this shift firsthand — especially in AI-powered applications, real-time dashboards, and mobile-first platforms.

Let’s break down how edge computing is changing app performance, and why it matters more than most companies realize.

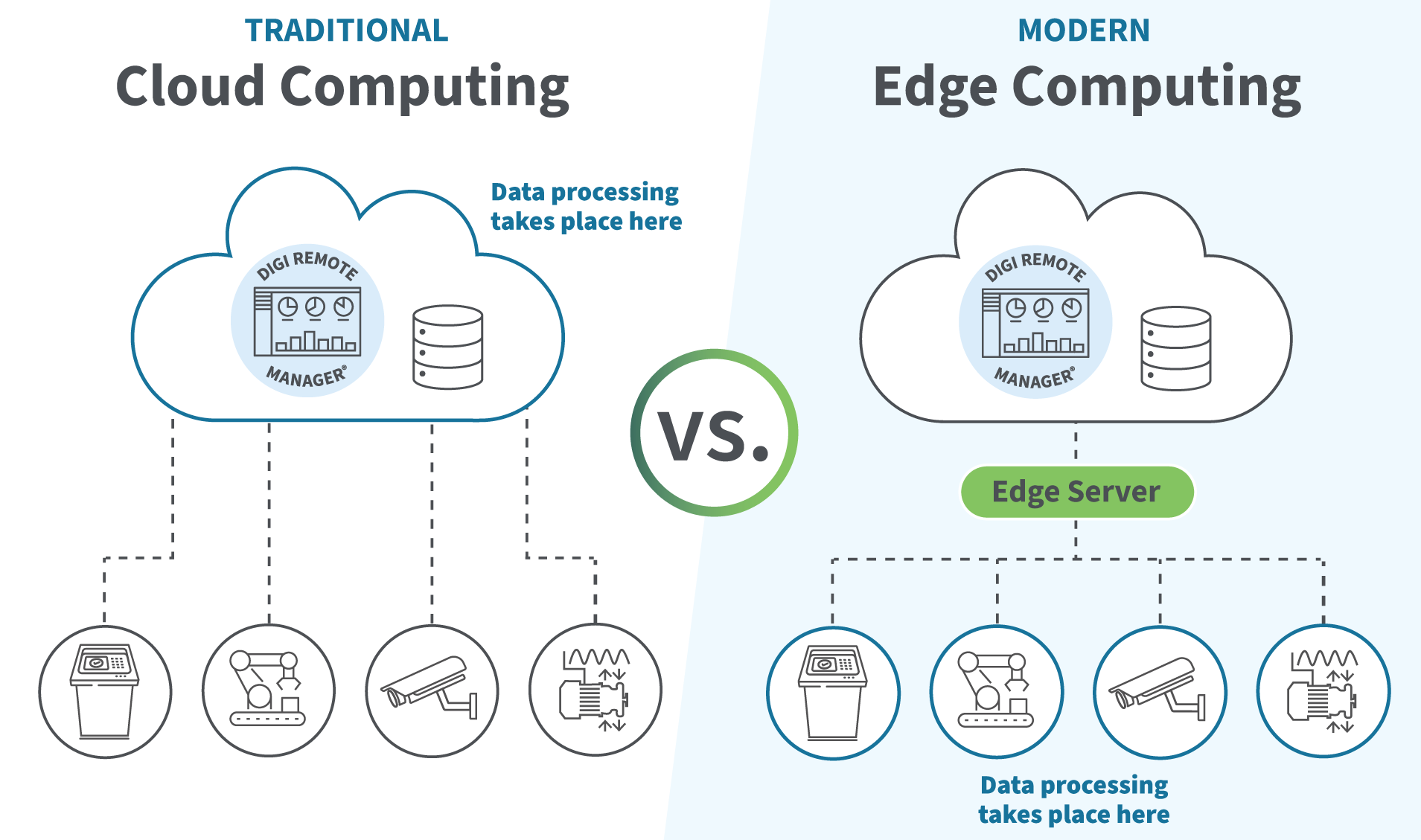

What Is Edge Computing?

Edge computing is a distributed computing model in which data is processed close to the user or device, rather than entirely on centralized cloud servers.

Instead of this:

User → Cloud Data Center → Response

It becomes:

User → Nearby Edge Node → Response

By reducing the physical and network distance data must travel, applications experience:

Lower latency

Faster load times

Improved reliability

Reduced bandwidth usage

Better real-time responsiveness

In simple terms: less travel time = better performance.
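Here is a back-of-the-envelope way to put rough numbers on "less travel time." It assumes signals move through optical fiber at about 200,000 km/s, roughly two-thirds of the speed of light in a vacuum. It ignores routing detours, queuing, and server processing, so real round trips will be longer. The distances are illustrative, not measurements.

```python
# Rough lower bound on network round-trip time from distance alone.
# Assumption: light in optical fiber travels at ~200,000 km/s (about 2/3 of c).
# Real RTTs are higher: routes are not straight lines, and routers,
# queues, and servers all add delay.

FIBER_SPEED_KM_PER_MS = 200.0  # 200,000 km/s == 200 km per millisecond


def min_round_trip_ms(distance_km: float) -> float:
    """Return the propagation-only round-trip time for a one-way distance."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_MS


# Illustrative distances (hypothetical, not measured):
far_region_km = 5000.0  # user to a distant cloud region
edge_node_km = 100.0    # user to a nearby edge node

print(f"Distant cloud region: {min_round_trip_ms(far_region_km):.1f} ms")
print(f"Nearby edge node:     {min_round_trip_ms(edge_node_km):.1f} ms")
```

At 5,000 km, the physical floor is 50 ms per round trip before any processing happens. At 100 km, it is 1 ms.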

Why Cloud-Only Architectures Are Hitting Limits

Traditional cloud models work well for centralized storage and large-scale computation. However, modern apps now demand:

Real-time AI processing

Instant messaging & collaboration

IoT device communication

AR/VR responsiveness

High-frequency transactions

Global user bases

When users are geographically far from data centers, even milliseconds of delay can impact:

User experience

App responsiveness

Conversion rates

Engagement

Retention

In high-performance environments, latency isn’t just a technical issue — it’s a business issue.

How Edge Computing Improves App Performance

1. Ultra-Low Latency

Edge servers process data near users, dramatically reducing round-trip time.

This is especially powerful for:

Fintech apps

Real-time dashboards

Multiplayer gaming

Live collaboration tools

AI assistants
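In practice, the provider usually routes requests to the nearest edge location through DNS or anycast routing. A client-side version of the same idea can be sketched as follows: probe each candidate node a few times, then send traffic to the node with the lowest median round-trip time. The node names and RTT samples below are hypothetical. A real client would collect them with actual network probes.

```python
from statistics import median

# Hypothetical RTT samples (milliseconds) from a handful of probes per node.
# A real client would measure these, e.g. by timing a small health-check request.
rtt_samples_ms = {
    "edge-frankfurt": [18.2, 17.9, 25.1, 18.4],
    "edge-london": [9.8, 10.4, 9.9, 80.0],  # one slow outlier probe
    "cloud-us-east": [88.5, 90.1, 87.9, 89.3],
}


def pick_fastest_node(samples: dict[str, list[float]]) -> str:
    """Return the node with the lowest median RTT.

    The median is used instead of the mean so that a single slow probe
    (packet loss, a retransmit) does not disqualify an otherwise fast node.
    """
    return min(samples, key=lambda node: median(samples[node]))


print(pick_fastest_node(rtt_samples_ms))
```

With these samples, the London node wins on median RTT, even though its one 80 ms outlier would make its mean worse than Frankfurt's.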

2. Smarter AI at the Edge

AI models can now run inference at the edge, so many decisions are made locally instead of sending every request to a central server.

Benefits include:

Faster AI responses

Lower cloud costs

Enhanced privacy

Reduced data transfer

This is becoming crucial in modern AI systems and cross-platform applications.
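One common way to get faster responses and less data transfer is a confidence-based cascade. A small model on the edge answers the requests it is confident about and forwards only uncertain ones to a larger cloud model. The sketch below is a toy. `edge_model` is a hypothetical stand-in for a compact edge-hosted model, `cloud_model` stands in for a remote API call, and the 0.8 threshold is an arbitrary assumption you would tune.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # 0.0 to 1.0
    served_by: str     # "edge" or "cloud"


def edge_model(text: str) -> Prediction:
    """Hypothetical small edge model: a keyword rule standing in for real inference."""
    lowered = text.lower()
    if "refund" in lowered:
        return Prediction("billing", 0.95, "edge")
    if "password" in lowered:
        return Prediction("account", 0.9, "edge")
    return Prediction("unknown", 0.3, "edge")


def cloud_model(text: str) -> Prediction:
    """Hypothetical large cloud model; in a real system this is a network call."""
    return Prediction("general", 0.99, "cloud")


CONFIDENCE_THRESHOLD = 0.8  # assumption: tune per model and use case


def classify(text: str) -> Prediction:
    """Answer at the edge when confident; otherwise escalate to the cloud."""
    local = edge_model(text)
    if local.confidence >= CONFIDENCE_THRESHOLD:
        return local
    return cloud_model(text)


for message in ["I want a refund", "Reset my password", "Tell me about your roadmap"]:
    result = classify(message)
    print(f"{message!r} -> {result.label} ({result.served_by})")
```

Only the third message leaves the edge node. The first two are answered locally without a cloud round trip.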

3. Improved Reliability

If a central cloud region goes down, edge nodes can continue operating independently.

This makes systems:

More fault-tolerant

More scalable

More resilient

Distributed systems are no longer optional for mission-critical applications.

4. Better Mobile & Cross-Platform Performance

Mobile users expect instant performance.

Edge computing reduces:

API wait times

Data fetch delays

App startup lag

For apps built with cross-platform frameworks, performance optimization increasingly includes edge-based content delivery and serverless edge functions.
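Startup lag compounds when an app makes several API calls in sequence, because each call pays the full round trip. The rough model below assumes a fixed server processing time per request. It ignores connection setup, TLS handshakes, and payload transfer, all of which would add more round trips. The call count and RTT values are illustrative assumptions, not benchmarks.

```python
def sequential_wait_ms(num_calls: int, rtt_ms: float, server_ms: float) -> float:
    """Total wait for calls made one after another (each waits for the previous).

    Simplified model: every call costs one network round trip plus server time.
    Connection setup and TLS handshakes are ignored; they would add more round trips.
    """
    return num_calls * (rtt_ms + server_ms)


# Illustrative assumptions, not measurements:
STARTUP_CALLS = 5  # e.g. config, auth, profile, feed, notifications
SERVER_MS = 20.0   # processing time per request

print(f"Distant region (RTT 120 ms): {sequential_wait_ms(STARTUP_CALLS, 120.0, SERVER_MS):.0f} ms")
print(f"Edge node (RTT 15 ms):       {sequential_wait_ms(STARTUP_CALLS, 15.0, SERVER_MS):.0f} ms")
```

Under these assumptions, the same five calls drop from 700 ms to 175 ms. The server work is identical in both cases, so the entire difference comes from round-trip time.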

Industries Already Benefiting from Edge Computing

Healthcare – Real-time patient monitoring

Retail – Smart checkout & localized personalization

Finance – Fraud detection & transaction validation

Manufacturing – IoT machine analytics

Smart Cities – Traffic and energy optimization

Edge computing is quietly becoming foundational infrastructure.
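For IoT workloads such as manufacturing machine analytics, much of the bandwidth saving comes from summarizing data at the edge before sending it upstream. The following is a minimal sketch. It assumes a sensor that reports one reading per second and a cloud backend that only needs per-minute summaries. The readings are synthetic, and the window size is an arbitrary choice.

```python
from statistics import mean


def summarize_windows(readings: list[float], window: int) -> list[dict[str, float]]:
    """Collapse each block of `window` raw readings into one min/max/mean record."""
    summaries = []
    for start in range(0, len(readings), window):
        chunk = readings[start:start + window]
        summaries.append({
            "min": min(chunk),
            "max": max(chunk),
            "mean": mean(chunk),
        })
    return summaries


# Synthetic data: 10 minutes of one-reading-per-second temperature values.
raw = [20.0 + (i % 7) * 0.1 for i in range(600)]
summary = summarize_windows(raw, window=60)

print(f"records sent without edge processing: {len(raw)}")
print(f"records sent after edge aggregation:  {len(summary)}")
```

Here, 600 raw readings become 10 summary records. The cost is that the cloud no longer sees individual readings, so any analysis that needs full resolution must run at the edge or keep raw data locally.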

Edge + Cloud: Not a Replacement — A Hybrid Evolution

Edge computing doesn’t replace cloud computing. It enhances it.

A typical hybrid architecture looks like this:

Edge → Real-time processing

Cloud → Heavy computation & storage

Hybrid orchestration → Intelligent workload distribution

This hybrid model allows apps to be both powerful and fast.
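The "intelligent workload distribution" layer can start as a simple placement rule. The sketch below assumes each task declares a latency budget and an estimated compute cost, and that an edge node has a fixed compute ceiling. The field names, thresholds, and the 80 ms cloud round-trip figure are hypothetical. Real orchestrators weigh many more factors, such as data locality, cost, and current load.

```python
from dataclasses import dataclass

# Hypothetical figures for illustration only.
CLOUD_RTT_MS = 80.0          # assumed round trip from user to the cloud region
EDGE_MAX_COMPUTE_UNITS = 10  # assumed per-task capacity of an edge node


@dataclass
class Task:
    name: str
    latency_budget_ms: float  # how long the user can wait for a result
    compute_units: int        # rough estimate of the work required


def place(task: Task) -> str:
    """Return 'edge' or 'cloud' for a task under the simple rule above."""
    needs_real_time = task.latency_budget_ms < CLOUD_RTT_MS
    fits_on_edge = task.compute_units <= EDGE_MAX_COMPUTE_UNITS
    if needs_real_time and fits_on_edge:
        return "edge"   # time-sensitive and light enough for the edge node
    return "cloud"      # latency-tolerant, or too heavy for the edge


tasks = [
    Task("fraud-check", latency_budget_ms=50.0, compute_units=2),
    Task("nightly-report", latency_budget_ms=60_000.0, compute_units=500),
    Task("ar-scene-render", latency_budget_ms=20.0, compute_units=40),
]
for task in tasks:
    print(f"{task.name}: {place(task)}")
```

The third task shows where a simple rule stops being enough. It needs a real-time answer but exceeds the assumed edge capacity, so it falls back to the cloud and would likely miss its latency budget. Cases like this are where the architecture work actually happens.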

Why Businesses Should Pay Attention Now

Here’s what makes edge computing strategically important in 2026:

Users expect instant experiences

AI applications demand real-time inference

IoT ecosystems are expanding

Global apps require distributed infrastructure

Performance directly affects revenue

Companies that optimize architecture early gain a major competitive advantage.

Is Edge Computing Right for Every App?

Not necessarily.

Edge computing becomes critical when:

Real-time performance is required

AI inference speed matters

Users are globally distributed

Bandwidth costs are high

System reliability is mission-critical

A thoughtful architecture strategy determines whether edge integration makes sense.

The Quiet Competitive Advantage

Edge computing is changing app performance “quietly” because users don’t see it.

They only feel:

Faster responses

Smoother interactions

Instant AI feedback

Seamless scalability

Behind the scenes, distributed infrastructure is doing the work.

Final Thoughts: Performance Is Now an Architecture Decision

App performance is no longer just about frontend optimization or backend scaling.

It’s about where computation happens.

Edge computing represents a structural shift in how applications are built — especially for AI-powered platforms, enterprise dashboards, and cross-platform ecosystems.

At Finally Free Productions, we believe the next generation of high-performing applications will combine:

Intelligent cloud systems

Distributed edge infrastructure

AI-driven optimization

Performance-first architecture design

Because in today’s digital landscape, speed isn’t just a convenience; it’s a competitive advantage.
